How Kleinanzeigen Leverages Astro to Power Germany’s Largest Online Classifieds Marketplace

The leader in sustainable trade built a modern data platform from the ground up, refactoring complex dbt workflows and orchestrating more than 2 PB of data with Astro.

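- Over 2 PB of production data orchestrated

- 600+ production tables migrated

- Under 6 months to complete the migration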

The Customer

Kleinanzeigen is Germany’s largest online classifieds platform, where people buy and sell a wide range of items, giving used products a second life and helping reduce waste. With more than 30 million monthly customers and more than 55 million active listings, the platform enables sustainable trade at massive scale across categories ranging from consumer goods to real estate. Kleinanzeigen’s lean data engineering team plays a vital role in optimizing marketplace operations and enhancing advertising insights.

The Challenge

Kleinanzeigen had the opportunity to build a modern data platform from the ground up. Starting with a blank page, the team needed to design a foundation that could scale to power advertising insights and marketplace operations for millions of users.

The team faced a dual challenge: standing up a modern stack while meeting fast-moving business demands. “We had to migrate everything while still keeping the business running. It was a foundational rebuild on a tight timeline,” says Maxim Hammer, Senior Data Architect.

The Solution

The team evaluated orchestration options with the goal of embedding speed, reliability, and governance into their platform from day one:

“We briefly looked into OSS Airflow and MWAA, but Astronomer offered more of the features that matched our requirements and helped us move quickly on our tight timeline.” – Maxim Hammer, Senior Data Architect

The team ultimately selected Astro to power orchestration across their new data stack, which they built intentionally on AWS, Databricks, and Unity Catalog. They prioritized standardization and self-service, ensuring a streamlined developer experience while maintaining robust controls and governance.

Rather than performing simple lift-and-shift migrations, the team refactored 100+ complex transformation DAGs, primarily dbt workflows orchestrated with Cosmos and tightly integrated with Databricks Unity Catalog. In just six months, they migrated these DAGs and more than 600 production tables without interrupting marketplace operations. The migration covered more than 2 PB of production data.

“Astro lets us stay focused on our core mission – building a reliable data platform – not running infrastructure.” – Maxim Hammer, Senior Data Architect

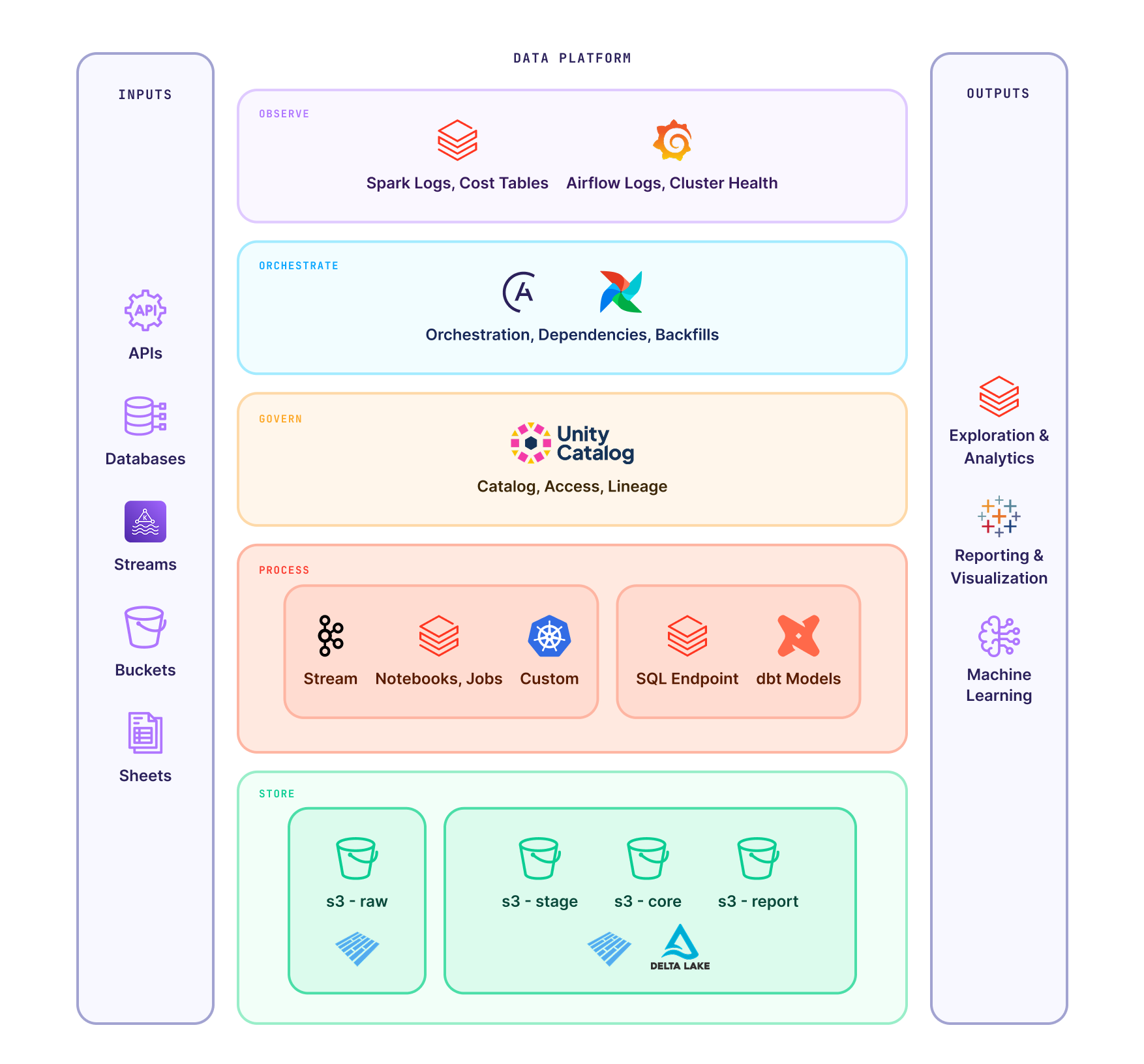

High Level Kleinanzeigen Data Platform Architecture

Enforcing Standards Across the Data Platform

From the very beginning, the Kleinanzeigen team used Astro to build standards and guardrails into the platform’s DNA. Managing orchestration through Astro let the team encode best practices in their Airflow deployments, so that every DAG, schema, and deployment followed consistent, reliable patterns from day one. These standards made the platform approachable for new users and reduced friction as it scaled.

The standards cover multiple layers of the platform:

- Pipeline structure: Standard ways to design DAGs, define dependencies, and manage task retries.

- Schema management: Clear rules for creating, versioning, and organizing data schemas, particularly in Databricks Unity Catalog and S3.

- Code and config management: Best practices for managing DAG code, dbt projects, and Airflow deployments using GitHub Actions, pre-commit hooks, and CI/CD pipelines.

- Data transformations: Guidelines for dbt models, including naming conventions, partitioning strategies, and materialization patterns.

- Access and permissions: Consistent patterns for setting up secure access to S3 buckets, Databricks catalogs, and secrets through the shared secret manager.

- Monitoring and alerting: Standard use of Airflow features like failure callbacks, logging, and data intervals, ensuring observability is built in by default.

By setting clear expectations and automating compliance with these patterns, the team made it easier for data engineers, analysts, and product teams to contribute confidently to the platform.
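The case study doesn't say how compliance is automated. One hypothetical way to enforce rules like those listed above is a small linter run from a pre-commit hook or CI job. The sketch below assumes each DAG's settings have already been extracted into a plain dictionary; the `check_dag_standards` function, the `<domain>__<purpose>` naming rule, and the `domain:` tag convention are invented for illustration and are not Kleinanzeigen's actual standards. Only `retries`, `on_failure_callback`, and `tags` correspond to real Airflow settings.

```python
"""Hypothetical standards linter, e.g. invoked from a pre-commit hook or CI job.

Assumes DAG settings are available as plain dicts. The rules are illustrative
assumptions, not Kleinanzeigen's real standards.
"""
import re

# Hypothetical naming convention: "<domain>__<purpose>" in lowercase snake_case.
DAG_ID_PATTERN = re.compile(r"^[a-z][a-z0-9_]*__[a-z][a-z0-9_]*$")


def check_dag_standards(dag: dict) -> list[str]:
    """Return human-readable violations for one DAG (empty list if compliant)."""
    violations = []
    dag_id = dag.get("dag_id", "")
    if not DAG_ID_PATTERN.match(dag_id):
        violations.append(f"{dag_id!r}: dag_id must look like '<domain>__<purpose>'")

    default_args = dag.get("default_args", {})
    # Pipeline structure: every task should retry at least once.
    if default_args.get("retries", 0) < 1:
        violations.append(f"{dag_id!r}: default_args['retries'] must be >= 1")
    # Monitoring and alerting: a failure callback must be configured.
    if default_args.get("on_failure_callback") is None:
        violations.append(f"{dag_id!r}: default_args['on_failure_callback'] is required")

    # Ownership (hypothetical convention): one tag must name the owning domain.
    if not any(tag.startswith("domain:") for tag in dag.get("tags", [])):
        violations.append(f"{dag_id!r}: add a 'domain:<name>' tag")
    return violations


def notify_on_failure(context):
    """Hypothetical placeholder callback; a real one would send an alert."""


good = {
    "dag_id": "ads__daily_revenue",
    "default_args": {"retries": 2, "on_failure_callback": notify_on_failure},
    "tags": ["domain:ads"],
}
bad = {"dag_id": "DailyRevenue", "default_args": {}, "tags": []}

print(check_dag_standards(good))
for problem in check_dag_standards(bad):
    print(problem)
```

Returning every violation instead of stopping at the first one lets a CI job report all problems in a single run.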

“Astro gave us the framework to bake in best practices from day one: everything from how we structure DAGs to how we manage schemas and permissions. Standards make the platform easy to use and hard to break.” – Jonas Kernebeck, Senior Data Engineer

Leveraging Cosmos to Scale dbt Orchestration

Cosmos, Astronomer’s open-source library for orchestrating dbt projects with Airflow, plays a critical role in making Kleinanzeigen’s orchestration scalable and maintainable. Cosmos bridges the gap between dbt and Airflow by rendering a dbt project as an Airflow DAG or task group in which each dbt model becomes its own Airflow task(s). It parses the dbt project (for example, from its manifest) and translates the model dependency graph into Airflow task dependencies, enabling model-level observability, retries of only the failed tasks, and seamless use of Airflow’s scheduling and monitoring capabilities. This enables the Kleinanzeigen team to:

- Achieve fine-grained observability into individual dbt models and track their execution status in Airflow.

- Simplify debugging, allowing engineers to pinpoint and resolve issues at the model level without rerunning entire workflows.

- Integrate seamlessly with CI/CD pipelines, supporting continuous deployment of dbt projects alongside Airflow DAGs.

- Ensure consistent use of Airflow features, such as data interval handling and alerting, across all dbt workloads.
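Cosmos's internals aren't covered here, but the core idea (turning dbt's model dependency graph into task dependencies so that only a failed model and its downstream models need to rerun) can be sketched with the standard library. The snippet uses a trimmed-down dict shaped like dbt's `manifest.json` (`nodes` keyed by unique ID, each with `resource_type` and `depends_on.nodes`). It is a simplified illustration of the technique, not Cosmos's implementation or API, and the `shop` project and model names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical, trimmed-down excerpt shaped like dbt's manifest.json.
manifest = {
    "nodes": {
        "model.shop.stg_listings": {"resource_type": "model", "depends_on": {"nodes": []}},
        "model.shop.stg_ads": {"resource_type": "model", "depends_on": {"nodes": []}},
        "model.shop.int_listing_ads": {
            "resource_type": "model",
            "depends_on": {"nodes": ["model.shop.stg_listings", "model.shop.stg_ads"]},
        },
        "model.shop.mart_ad_performance": {
            "resource_type": "model",
            "depends_on": {"nodes": ["model.shop.int_listing_ads"]},
        },
    }
}


def model_graph(manifest: dict) -> dict[str, set[str]]:
    """Map each model (one task per model) to the models it depends on.

    Non-model nodes such as tests are skipped, and dependencies on anything
    that is not a model (e.g. sources) are dropped.
    """
    models = {
        uid: node for uid, node in manifest["nodes"].items()
        if node["resource_type"] == "model"
    }
    return {
        uid: {dep for dep in node["depends_on"]["nodes"] if dep in models}
        for uid, node in models.items()
    }


def downstream_of(graph: dict[str, set[str]], failed: str) -> set[str]:
    """Return the failed model plus every model that transitively depends on it."""
    to_rerun, frontier = {failed}, [failed]
    while frontier:
        current = frontier.pop()
        for uid, deps in graph.items():
            if current in deps and uid not in to_rerun:
                to_rerun.add(uid)
                frontier.append(uid)
    return to_rerun


graph = model_graph(manifest)
# A valid execution order: every model appears after all of its parents.
order = list(TopologicalSorter(graph).static_order())
# If stg_ads fails, stg_listings does not need to run again.
rerun = downstream_of(graph, "model.shop.stg_ads")
print(order)
print(sorted(rerun))
```

In Airflow itself, the rerun step corresponds to clearing a failed task together with its downstream tasks; rendering each model as its own task is what makes that possible at model granularity.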

The Kleinanzeigen team uses Cosmos to orchestrate a broad range of dbt transformations on Databricks, benefiting from Airflow’s orchestration flexibility while maintaining clarity and structure in their workflows. This approach enabled rapid adoption of best practices across the organization and laid the groundwork for scalable data operations.

“Cosmos gave us granular visibility into dbt models and let us reuse Airflow’s features like data intervals and alerting. That made our dbt workflows much easier to operate at scale.” – Thibault Latrace, Senior Data Engineer

Keeping an Eye on Costs with Astro

As Kleinanzeigen’s data platform grew, so did the need to monitor and manage infrastructure costs across their stack. The team built a custom “Costs DAG” that automatically aggregates usage data from Astronomer, Databricks, AWS Cost Explorer, and system billing tables.

The Costs DAG consolidates this data into a single source of truth, giving the team visibility into which domains, environments, and users are driving spend. With this insight, they can:

- Track usage and costs across all major data platform components.

- Proactively identify cost spikes and anomalies.

- Drive accountability by surfacing usage patterns to domain teams.
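The Costs DAG's code isn't published. As a rough sketch of its core step, the snippet below assumes usage records from each source have already been normalized into one common shape; the `CostRecord` fields, the sample figures, the 7-day window, and the 2x spike threshold are all hypothetical. It rolls costs up by any combination of dimensions and flags days whose spend exceeds a multiple of the trailing average.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass(frozen=True)
class CostRecord:
    """Hypothetical normalized usage record (one per source, scope, and day)."""
    source: str        # e.g. "astro", "databricks", "aws"
    domain: str
    environment: str
    user: str
    day: date
    cost_usd: float


def rollup(records, *dims):
    """Sum cost_usd grouped by the named record attributes, e.g. ("domain",)."""
    totals = defaultdict(float)
    for r in records:
        key = tuple(getattr(r, d) for d in dims)
        totals[key] += r.cost_usd
    return dict(totals)


def find_spikes(daily_totals, window=7, factor=2.0):
    """Flag days whose cost exceeds `factor` times the mean of the prior `window` days.

    `daily_totals` maps date -> cost; days without enough history are skipped.
    """
    days = sorted(daily_totals)
    spikes = []
    for i in range(window, len(days)):
        baseline = mean(daily_totals[d] for d in days[i - window:i])
        if daily_totals[days[i]] > factor * baseline:
            spikes.append(days[i])
    return spikes


# Hypothetical sample data: steady spend, then one expensive day.
records = [
    CostRecord("databricks", "ads", "prod", "etl_bot", date(2024, 5, d), 100.0)
    for d in range(1, 9)
] + [
    CostRecord("databricks", "ads", "prod", "analyst_a", date(2024, 5, 9), 450.0),
    CostRecord("astro", "search", "dev", "etl_bot", date(2024, 5, 9), 20.0),
]

by_domain = rollup(records, "domain")
daily_ads = {
    day: cost
    for (domain, day), cost in rollup(records, "domain", "day").items()
    if domain == "ads"
}
print(by_domain)
print(find_spikes(daily_ads))
```

Passing different dimension tuples to `rollup` (for example `("environment", "user")`) yields the per-domain, per-environment, and per-user breakdowns described in this section from a single normalized table.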

The Kleinanzeigen team continues to refine this approach, aiming to make cost transparency a first-class part of their platform operations.

“Before, cost was a black box. Now we can break it down by domain, user, or environment and actually see where spend is coming from. The Cost DAG we built in Astro gave us that visibility.” – Maxim Hammer, Senior Data Architect

The Results

Kleinanzeigen now operates a modern, scalable data platform that empowers teams to generate advertising insights and optimize marketplace performance with greater speed and reliability. Engineers focus on business logic, not infrastructure, improving agility and reducing time to insight. Key outcomes include:

- A 6-month migration of the full production workload to Astro, meeting critical business deadlines.

- Rapid deployment of new pipelines, including cost tracking, automated cleanup of stale tables, and robust data quality checks.

- Improved developer experience with a fully reproducible local Airflow setup, shared libraries, enforced standards, and automated testing for pipelines.

- Strong governance through standardized data layers, permission management, and automated alerting on DAG failures.

“With Astro, we stopped firefighting infrastructure and focused on delivering the data products our business depends on.” – Maxim Hammer, Senior Data Architect

What’s Next

Kleinanzeigen is continuing to build on their foundational data platform work, deepening their investment in clear data pipeline and dataset ownership models, as well as automated testing and data modeling standards to support their growing user base and data footprint. As more teams adopt the platform, they are focused on making it easier for domain owners to take responsibility for their own data pipelines – without sacrificing governance or quality.

The data engineering team is also working to make their platform even more business-friendly by improving how product and advertising teams discover and use key datasets. This includes simplifying data discovery in the catalog, making dashboards and pipelines easier to find and understand, and streamlining access controls so teams can confidently use the data they need without waiting on engineering support. These efforts aim to reduce friction between engineering and business users and ensure that high-quality, trustworthy data is available for day-to-day decision-making.

“We’re building a platform where domain teams can own their data end-to-end, but still feel confident that they’re doing things the right way, because we’ve embedded those guardrails directly into Astro.” – Maxim Hammer, Senior Data Architect

Learn What Astronomer Can Do For You

Kleinanzeigen designed its data platform from the ground up with Astro – embedding orchestration, governance, and scale from day one. See how you can do the same.